Illustration: Gordon McAlpin

The connection between your monitor and computer is almost certainly one of three cable types: VGA, HDMI, or DVI. So what's the difference?

VGA is the oldest of the three, having been introduced in 1987. VGA handles only video, not audio, has no security or digital rights management, and carries an analog signal, which means the quality of the cable, the quality of the pins, and the distance from the computer to the monitor can all affect video quality. If the connector has little thumbscrews next to the cable and looks like the one on the left of the illustration, it's VGA.

HDMI, which first appeared in 2003, is found on most modern TVs and computer monitors, and has quickly become standard equipment on most laptops. HDMI is a digital standard, which means the signal is sent as ones and zeros. The quality of the cable, the distance from the machine to the monitor, and the type of metal in the connector all make little to no difference (so don't overpay for your HDMI cable!). HDMI also supports content protection, meaning that certain types of signals, such as pay TV, can be blocked from traveling along the HDMI cable. HDMI handles audio as well as video, so a monitor with a headphone jack and an HDMI cable can output audio from your laptop. HDMI looks like the connector in the middle of the illustration.

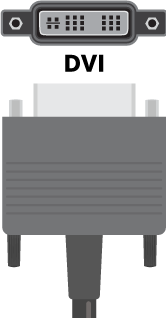

DVI was invented in 1999 and is similar to both VGA and HDMI. It can be configured for digital, analog, or both; it can work with digital rights management; and it can be converted to both HDMI and VGA using an adapter cable or a dongle (a small converter device). More than likely, your graphics card has an HDMI connector, a DVI connector, or both, and it often comes with the required dongle. Unlike HDMI, DVI doesn't support audio, and it looks like the connector on the right of the illustration above.

Now you know the difference between each of these cable types, as well as the pros and cons of each. Consider yourself educated on video connectors!

We come across the abbreviations VGA and DVI when we look at monitors, TVs, and video cards (or assembled computers, or motherboards). In this context they refer to interfaces and standards for connecting video equipment. There is one nuance: VGA also designates a screen resolution of 640x480. In comparing DVI and VGA, however, we will consider only the connection and signal transmission interfaces. It should be said right away that the heated arguments on this topic died down a few years ago: technology does not stand still, and the era of HDMI everywhere is fast approaching.

Definition

VGA: an analog 15-pin interface for connecting monitors to video equipment, primarily PCs.

DVI: a digital interface for connecting monitors to video equipment. Depending on the variant, the connector has 17 to 29 pins.

Comparison

The difference between VGA and DVI is already visible from the definitions: one interface is analog, the other digital. Most inexperienced users know that we live in the digital era, so they automatically prefer DVI as the more modern interface. There are reasons for this, since digital technologies offer ample possibilities and are steadily displacing analog ones. That is the theory, though. In practice it is sometimes impossible to tell a picture delivered over VGA from one delivered over DVI, especially on monitors above the budget segment. Is it worth bothering with the abbreviations in that case?

Picture quality is, in theory, higher on DVI, because video cards are digital devices. VGA works roughly like this: the digital signal is converted to analog for transmission over the cable, and then converted back to digital at the display to produce the image. For DVI the chain is shorter, digital to digital, so no quality is lost in conversion. The picture over VGA can also be distorted by external interference, to which DVI is far less susceptible. Settings are worth a mention too: DVI allows automatic image adjustment (the control is digital as well), leaving the user only color and brightness settings to tune to their eyesight, lighting, and tasks. VGA requires the user to work out what they want and adjust the image to match on their own.
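
The two signal chains can be compared with a toy simulation. This is only a rough sketch under stated assumptions: the 0.7 V swing is the nominal VGA video level, while the noise level, the function names, and the one-level-per-bit digital model are illustrative simplifications (real DVI uses differential TMDS signaling, not bare logic levels).

```python
import random

VGA_SWING_VOLTS = 0.7  # nominal VGA video level, black to full white


def analog_round_trip(pixels, noise_sigma, rng):
    """D/A conversion, a noisy cable, then A/D conversion at the display."""
    received = []
    for value in pixels:
        volts = value / 255 * VGA_SWING_VOLTS        # D/A conversion
        volts += rng.gauss(0.0, noise_sigma)         # interference on the cable
        code = round(volts / VGA_SWING_VOLTS * 255)  # A/D conversion
        received.append(min(255, max(0, code)))
    return received


def digital_link(pixels, noise_sigma, rng):
    """Send every bit as a low or high level and threshold it (assumed model)."""
    received = []
    for value in pixels:
        out = 0
        for bit in range(8):
            level = ((value >> bit) & 1) * VGA_SWING_VOLTS
            level += rng.gauss(0.0, noise_sigma)     # same noise as the analog path
            if level > VGA_SWING_VOLTS / 2:
                out |= 1 << bit
        received.append(out)
    return received


def count_errors(sent, got):
    """Number of pixel values that arrived changed."""
    return sum(1 for a, b in zip(sent, got) if a != b)


pixels = list(range(256))
rng = random.Random(1)  # fixed seed so the run is repeatable
analog = analog_round_trip(pixels, noise_sigma=0.01, rng=rng)
digital = digital_link(pixels, noise_sigma=0.01, rng=rng)
print("analog errors:", count_errors(pixels, analog))
print("digital errors:", count_errors(pixels, digital))
```

With the same noise on both paths, the analog values drift because one 8-bit step is under 3 mV, whereas the digital bits only need to land on the correct side of a threshold.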

Physically, DVI and VGA connectors are completely different, but adapters ensure compatibility. VGA was originally intended for CRT monitors, and one of the three DVI variants (I, A, and D) carries only the kind of analog signal that CRTs use: DVI-A. DVI-D is digital only, and DVI-I is universal, carrying both.
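
Which connectors can be bridged with a simple adapter follows from the signal types each one carries. The table and the function name below are hypothetical, written only to illustrate the idea; audio and link count are deliberately ignored.

```python
# Signal types carried by each connector (DVI variants I, A and D as described above).
SIGNALS = {
    "VGA": {"analog"},
    "HDMI": {"digital"},
    "DVI-A": {"analog"},
    "DVI-D": {"digital"},
    "DVI-I": {"analog", "digital"},
}


def passive_adapter_works(source, sink):
    """True if both ends share a signal type, so a plain adapter is enough."""
    return bool(SIGNALS[source] & SIGNALS[sink])


for source, sink in [("DVI-I", "VGA"), ("DVI-D", "HDMI"), ("DVI-D", "VGA")]:
    verdict = "adapter" if passive_adapter_works(source, sink) else "active converter"
    print(f"{source} -> {sink}: {verdict}")
```

When the two ends share no signal type, as with DVI-D and VGA, a plain adapter is not enough and a converter that actually performs the digital-to-analog conversion is needed.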

Modern video cards and video outputs built into motherboards have dropped VGA entirely or almost entirely, leaving this interface only to the most budget models. Where it is present, it is almost always paired with DVI, so the user has a choice. Display devices with VGA inputs are a little more common, for reasons of economy, and an adapter is often included with such monitors. Here too we are talking about budget and small-format models. Mobile electronics, previously equipped with miniVGA connectors, have now almost completely switched to HDMI.

Findings

- DVI is a digital interface; VGA is analog.

- VGA involves double signal conversion.

- The picture quality of DVI is theoretically higher.

- A VGA connection may suffer from external interference.

- DVI allows automatic picture adjustment.

- VGA has 15 pins; DVI has 17 to 29.

- Today, VGA is found mainly on budget monitors and video cards.

Monitors are connected to other devices through a variety of interfaces, of which there are currently plenty. Depending on the technology, connections fall into two types: analog and digital. The latter are represented by two main interfaces, DVI-D and HDMI. Which is better, and what is actually the difference between these technologies? Which connector should you choose? The sections below discuss in more detail where HDMI is better than DVI.

Requirements of modern technology

To determine which connector is better, DVI or HDMI, it's worth understanding why only these two are considered, since there are other interfaces, for example DisplayPort and VGA. DisplayPort is used primarily to connect monitors to computers, including multi-screen setups, but it is rare on TVs and other consumer video equipment. VGA is currently considered an outdated solution; many leading companies have abandoned it in their products. By the way, it was thanks to VGA that the

But analog transmission is also taking a back seat today, because it exposes most of a picture's flaws. The signal is first converted to analog and then back again. These unnecessary conversions introduce interference on the screen; common problems are ghosting and doubled copies of objects, buttons, or text. These shortcomings are especially noticeable on early LCD monitors, which supported only a VGA connection.

As for connectors, most manufacturers now put ports for both digital cables on the rear panels of their equipment. As a rule, though, this only complicates matters when you need to decide which cable is better, HDMI or DVI.

Common features of interfaces

Both HDMI and DVI transmit video using the same technology, TMDS (Transition-Minimized Differential Signaling). The data is encoded to produce a bit sequence with few transitions and a balanced number of ones and zeros. This allows high signal frequencies and, as a result, a better image.
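
The first half of that encoding can be sketched in a few lines. This is an illustrative reconstruction of the transition-minimizing stage of TMDS as it is publicly described (8 data bits become 9); the second, DC-balancing stage that produces the final 10-bit symbol is omitted, and the function names are my own.

```python
def tmds_stage1(byte):
    """Transition-minimizing stage: 8 data bits in, 9 bits out (LSB first).

    Bit 8 records which operation was used: 1 for XOR, 0 for XNOR.
    """
    d = [(byte >> i) & 1 for i in range(8)]
    ones = sum(d)
    use_xnor = ones > 4 or (ones == 4 and d[0] == 0)
    q = [d[0]]
    for i in range(1, 8):
        x = q[i - 1] ^ d[i]
        q.append(1 - x if use_xnor else x)
    q.append(0 if use_xnor else 1)
    return q


def tmds_stage1_decode(q):
    """Invert tmds_stage1 and return the original byte."""
    use_xnor = q[8] == 0
    d = [q[0]]
    for i in range(1, 8):
        x = q[i] ^ q[i - 1]
        d.append(1 - x if use_xnor else x)
    return sum(bit << i for i, bit in enumerate(d))


def transitions(bits):
    """Count 0->1 and 1->0 changes between neighbouring bits."""
    return sum(1 for a, b in zip(bits, bits[1:]) if a != b)


raw = [(0x55 >> i) & 1 for i in range(8)]
encoded = tmds_stage1(0x55)
print(transitions(raw), "transitions before,", transitions(encoded[:8]), "after")
```

For the alternating pattern 0x55, the raw byte has seven transitions and the encoded data bits have three, which is the point of the scheme: fewer edges on the wire for the same data.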

In addition, a single cable can serve both types of port (even though HDMI is a single-link solution while DVI can be multi-link, as discussed below). This is possible through the use of special adapters.

Distinctive features

Many personal computer users choose HDMI. Why is that, and what does HDMI offer that DVI cannot? There are two main differences:

- An HDMI cable can transmit not only video but also an audio signal, so it delivers both high-quality sound and a high-quality picture. Most DVI implementations do not have this feature, although of course there are exceptions to the rule.

- High-Definition Multimedia Interface is a single-link cable, yet its data rate is measured in gigabits per second (4.95 Gbit/s in the first version of the standard). DVI, by contrast, can carry several kinds of signal, one of which is analog. For devices that rely on such a signal, a DVI cable is a godsend. DVI thus keeps up with the times without ignoring the needs of owners of equipment that cannot boast modern internals.

To decide whether HDMI is better than DVI, you need to understand that image quality depends mostly on the device being connected. The picture that appears on the screen is affected by the signal level, while the stability and quality of transmission are down to the cable.

Do not try to plug one cable in place of the other, though; there are special adapters for this. Otherwise you may simply lose the audio. Although both interfaces use the same technology, the differences make themselves felt.

High Definition Multimedia Interface

Where HDMI beats DVI is in adoption: the High-Definition Multimedia Interface is used by a great many equipment manufacturers. It is so common that HDMI can connect not only TVs and monitors to computers, but also laptops, tablets, smartphones, game consoles, and media players.

Although the cable consists of a single link, it has high bandwidth, allowing you to build a whole system out of various multimedia devices, which some setups require.

New versions of the cable are backward compatible and readily replace previous ones. HDMI has high throughput, which matters to gamers and to anyone who values high speeds and improved sound. At the same time, HDMI is purely digital: it supports only the digital format, so older analog-only equipment cannot be connected with it directly.

Digital Visual Interface

The DVI interface comes in three varieties that support different modes: digital, analog, and combined analog and digital. The cable can carry a high-resolution picture over a distance of no more than five meters. Signal transmission can work in two modes: single link and dual link. Dual link adds a second set of TMDS data pairs and so supports higher pixel clocks. If the picture is poor, or a display mode is unavailable, over single link, dual link is what corrects the situation.
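
Whether a display mode fits single link comes down to its pixel clock. The estimate below is a back-of-the-envelope sketch: 165 MHz is the single-link DVI pixel-clock ceiling, but the 12% blanking overhead is an assumed round figure (roughly what reduced-blanking timings need), the doubled ceiling for dual link is nominal, and the function names are illustrative.

```python
SINGLE_LINK_MAX_MHZ = 165.0       # single-link DVI pixel-clock ceiling
ASSUMED_BLANKING_OVERHEAD = 0.12  # assumption: roughly reduced-blanking timings


def pixel_clock_mhz(width, height, refresh_hz, overhead=ASSUMED_BLANKING_OVERHEAD):
    """Estimate the pixel clock in MHz for a display mode."""
    return width * height * refresh_hz * (1 + overhead) / 1e6


def dvi_link_needed(width, height, refresh_hz):
    """Return which DVI link mode the estimated pixel clock calls for."""
    clock = pixel_clock_mhz(width, height, refresh_hz)
    if clock <= SINGLE_LINK_MAX_MHZ:
        return "single link"
    if clock <= 2 * SINGLE_LINK_MAX_MHZ:  # nominal: two links side by side
        return "dual link"
    return "beyond dual link"


for mode in [(1920, 1080, 60), (1920, 1200, 60), (2560, 1600, 60)]:
    print(mode, "->", dvi_link_needed(*mode))
```

Under these assumptions, 1920x1200 at 60 Hz still fits single link, while 2560x1600 at 60 Hz needs dual link.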

Competitive advantage

HDMI is the more forward-looking interface in any case. Its developers follow trends and keep up with the times. Most current equipment already uses this type of cable and will certainly continue to in the near future.

HDMI or DVI for a computer: which is better to choose? Here you can use either interface, and the final choice depends on the purpose. If sound quality matters, it is better to use HDMI; if not, DVI is also suitable. DVI is especially good in that its developers, while advancing the technology, have not forgotten those who use old devices. After all, quite a lot of users still have computers and TVs that are no longer “in trend”. In that case it is better to settle on DVI.

If you look at the history of technology, you will see that development is proceeding at a rapid pace, with modern technologies under continuous improvement. DVI is one of the most widely used video transmission technologies and has replaced VGA. Why is DVI better than VGA? This is the question I will try to answer in this article.

In electronic devices, signal quality is of paramount importance. In particular, audio and video cables and connectors must keep the signal as close to the original as possible. DVI and VGA connectors use two different signal transmission technologies and are mainly used to connect a desktop computer to a monitor.

Comparison of DVI and VGA

If you're wondering whether you should switch to DVI on your old computer, be sure to read this article to the end. Let's go straight to the differences between DVI and VGA, starting with the main differences in technology.

Main differences between DVI and VGA

VGA (Video Graphics Array): a 15-pin connector for transmitting video signals between devices. It is used to connect computer monitors and HDTVs. The technology was developed by IBM in 1987.

These connectors carry the signal in analog form and are therefore suitable for CRT monitors, but less well suited to LCDs and other digital displays. The video signal is transmitted over a VGA cable. Because the VGA signal is analog, driving a digital display requires D/A and then A/D conversion.

DVI (Digital Visual Interface) was developed by the Digital Display Working Group in 1999 as a successor to the VGA technology widely used at the time. It is designed to transmit digital signals in uncompressed form. DVI connectors and cables are designed to be compatible with VGA. DVI connectors have up to 29 pins, with the ability to transmit analog and digital signals.

Resolution and image quality

The resolution and image quality offered by DVI are superior to VGA for the simple reason that the signal is digital. Digital signals are inherently far more resistant to noise, whereas the signal transmitted through a VGA cable may be distorted by ambient noise.

DVI connectors are ideal for digital display devices. The main difference between DVI and VGA is that DVI does not require converting the digital signal to analog, so no quality is lost in conversion. DVI cables can also transmit information over longer distances without distortion (that is, a DVI cable can be longer than a VGA cable).